Artificial Intelligence (AI) has emerged as one of the defining phenomena of the 21st century, with promises of revolutionizing healthcare, transportation, education, finance, and virtually every other sector. Headlines proclaim AI systems that surpass human experts, automate jobs, and even mimic human writing and creativity. Yet, beyond the surface-level fascination lies a troubling question: are we collectively misinterpreting what AI truly represents? Are we part of a mass-delusion event?

TLDR: Modern AI systems, while impressive in limited contexts, are often portrayed with unrealistic expectations. The belief that AI possesses understanding or consciousness is a widespread misconception fueled by media hype and corporate interests. Many AI limitations are glossed over, leading to misguided policies and misallocated resources. By detaching myth from reality, we can more responsibly assess what AI can—and cannot—achieve.

What Is Artificial Intelligence—Really?

The term “Artificial Intelligence” itself is both powerful and misleading. Originally coined to describe machines that could perform tasks considered to require human intelligence, it now serves as a convenient umbrella term. However, much of what we call “AI” today is simply data processing at scale, orchestrated through statistical models built on massive datasets. These systems don’t understand, think, or possess awareness.

Such systems operate based on patterns and probabilities, not genuine comprehension. Language models like ChatGPT or image generators like Midjourney are trained on enormous datasets and can produce text or images that seem ‘intelligent’, but this is largely mimicry without meaning.

The Hype Machine: Who Benefits?

AI’s exaggerated capabilities didn’t emerge in a vacuum. A convergence of tech corporations, media outlets, venture capital, and public relations campaigns has supported a narrative that AI is not just a tool, but a revolutionary force that will redefine society. But why?

Corporations want to showcase cutting-edge innovation to boost stock prices and attract investors. Startups pump up AI buzz to obtain funding. Governments invest in AI supremacy for geopolitical reasons. Even academics might overstate the promise of AI to secure grants or press coverage.

This creates a self-reinforcing cycle: hyped claims fuel investment, investment fuels more research and development, and the loop continues—often detached from grounded realities.

The Misconception of Understanding

One of the most pervasive illusions is that AI ‘understands’ the content it creates or processes. In reality, these systems operate on statistical associations between words or images. A language model, for instance, predicts the next token (roughly, a word or word fragment) from the preceding context and produces text by repeating that step. There is no awareness, intention, or comprehension behind the output.
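To make "predicting the next word from prior context" concrete, here is a deliberately tiny sketch. It is a toy bigram counter, not how ChatGPT or any production model works; real systems use neural networks over much longer contexts. The corpus and the function names `train_bigram` and `predict_next` are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, invented for this example.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigram(corpus)

prediction = predict_next(model, "the")      # "cat" follows "the" most often
unseen = predict_next(model, "unicorn")      # never seen, so None
print(prediction, unseen)
```

The program outputs "cat" after "the" only because that pair is the most frequent in the counts. Nothing in the code represents what a cat is, which is the sense in which the essay calls such output association rather than understanding.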

As philosopher John Searle argued in his Chinese Room thought experiment, syntactic manipulation of symbols does not equate to semantic understanding. Applied to AI, the argument implies that no matter how humanlike a chatbot’s responses appear, the system itself remains devoid of consciousness or meaning.

Cases of Overpromise and Underdelivery

AI history is littered with examples where bold forecasts failed to materialize:

- Autonomous vehicles: Despite years of testing and billions in investment, fully autonomous cars remain confined to limited areas and conditions, and are not yet practical or demonstrably safe for general real-world use.

- AI diagnosis in healthcare: Initially heralded as superior to human doctors, many AI diagnostic tools have shown significant bias, poor generalizability, or failed under scrutiny.

- AI-driven education platforms: Promised to revolutionize learning, yet many have only marginally improved existing systems, with limited evidence of long-term benefits.

These examples are not isolated; they form a pattern. When the complexity of a problem is underestimated, systems promise more in theory than they deliver in practice.

The Pattern of Magical Thinking

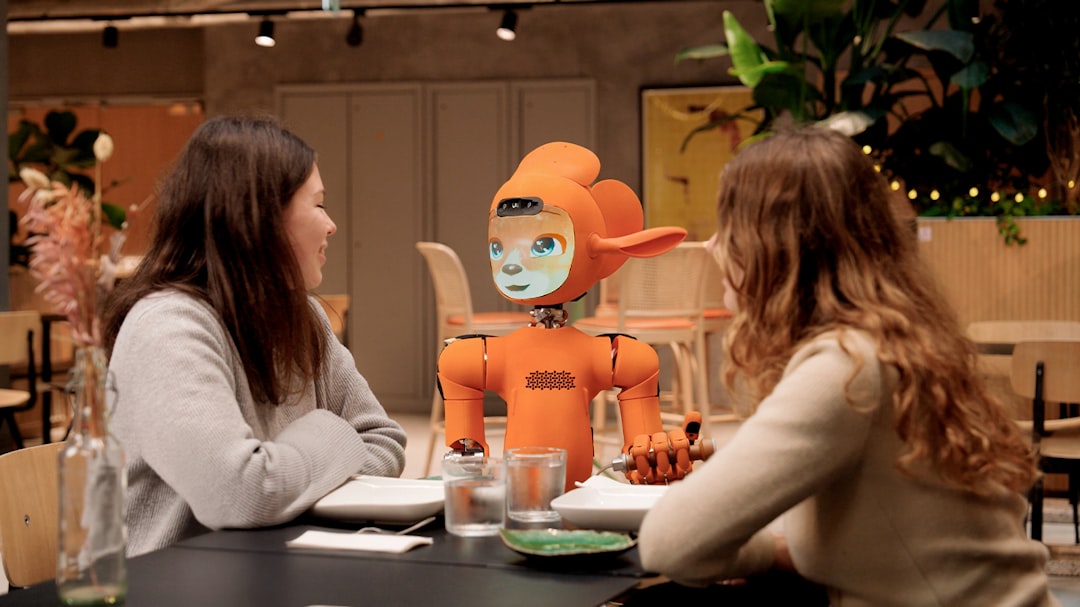

Societies have long experienced waves of optimism around new technologies—from electricity to nuclear power to the internet. But AI carries a curious distinction: the tendency for people to attribute human-like qualities and intelligence to an algorithm.

This reflects a form of technological animism, where people ascribe sentience and reasoning to inanimate code. This isn’t entirely irrational—AI interfaces are often designed to appear conversational or empathetic. However, it’s a design trick, not a functional reality.

The Illusion of Progress Through Metrics

One critical flaw in evaluating AI’s capabilities lies in the use of narrow benchmarks. For instance, beating humans at games like Go or chess does not equate to general intelligence. AI can outperform humans in constrained environments with clear rules and objectives, but it falters in dynamic, ambiguous real-world situations.

Metrics such as “accuracy,” “speed,” or “perplexity” can suggest impressive performance without accounting for context, fairness, or unintended consequences. By focusing on what can be measured, we may ignore what truly matters: ethical use, long-term societal impact, and transparency in decision-making.
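As a rough illustration of how a headline metric can mislead, the sketch below scores a "model" that always predicts the negative class on an imbalanced dataset. The data is invented for the example (5 positive cases out of 100), and the helper names `accuracy` and `recall` are illustrative, not taken from any particular library.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Fraction of truly positive cases that were predicted positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    if not preds_for_positives:
        return 0.0
    hits = sum(p == positive for p in preds_for_positives)
    return hits / len(preds_for_positives)


# Hypothetical screening data: 5 positive cases, 95 negative cases.
y_true = [1] * 5 + [0] * 95

# A trivial "model" that predicts negative for everyone.
y_pred = [0] * 100

acc = accuracy(y_true, y_pred)   # 95 of 100 labels match
rec = recall(y_true, y_pred)     # none of the 5 positives are found
print(f"accuracy={acc:.2f}, recall={rec:.2f}")
```

Under these assumptions the model scores 95% accuracy while detecting none of the cases it exists to find. A single number reported without context can therefore look impressive and still say little about whether the system is fit for purpose.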

AI’s Hidden Costs and Ethical Risks

Behind the scenes, modern AI systems are resource-intensive and dependent on human labor. Training large models consumes enormous amounts of energy, contributing to environmental harm. Moreover, these systems often rely on poorly paid workers, such as content moderators and data labelers, to function.

Key ethical concerns include:

- Bias and discrimination: AI models often reproduce and amplify societal prejudices embedded in their training data.

- Job displacement: Automation is replacing skilled and unskilled labor alike, often without viable transition plans.

- Lack of accountability: AI decisions can be opaque, with no clear chain of responsibility.

- Surveillance and control: Governments and corporations have used AI for mass surveillance, impacting civil liberties.

Rather than being democratizing, AI may in fact reinforce existing inequities if left unchecked.

Rethinking Intelligence and Agency

The AI-as-super-intelligence narrative is also rooted in a narrow definition of intelligence. Human intelligence is deeply contextual—connected to emotion, intent, culture, and history. Machines, however advanced, operate on fundamentally different principles.

By equating pattern recognition with reasoning, we devalue the complexities of human cognition. Critical thinkers argue for a more nuanced view—one that situates AI as a set of tools, not sentient entities. Intelligence, they contend, should not be reduced to computation alone.

Why the Mass Delusion Persists

The persistence of the AI myth stems from a blend of economic incentive, psychological bias, media spectacle, and genuine technological wonder. But perhaps most crucially, it reflects a societal desire for easy solutions to complex problems. Automation promises efficiency, simplicity, even objectivity—but these are rarely delivered without cost.

Historically, mass delusions have occurred when belief surpasses evidence. Tulip mania, the dot-com bubble, and even 20th-century eugenics movements were propelled by belief systems dressed up in science. AI, while based on legitimate advances, carries the same risk when adopted uncritically.

Conclusion: The Path to Realism

Artificial Intelligence is not a hoax—but the belief that it is autonomous, intelligent, or revolutionary in a human-like way is a delusion that must be addressed. Rather than attributing mystical power to AI tools, we should focus on their actual capacities, limitations, and impacts.

To move forward responsibly, we need:

- Transparent reporting on AI limitations and failures

- Independent regulation and critical oversight

- Ethical standards and human-centered design

- Public education that demystifies AI concepts

By grounding our understanding in evidence rather than allure, we can ensure that AI serves human ends—not the other way around. The aim is not to oppose AI, but to soberly scrutinize it, resisting delusion in favor of discernment.